OKRs That Don't Suck

This talk was given at Lean Agile Exchange 2020 on Friday, 11 September 2020.

Objectives and Key Results (OKRs) are awesome! So why do they work so badly for some companies? Why do they often end up as another stick to beat teams with? Adrian will share his experiences of working with OKRs. We’ll walk through ways to create and work with OKRs effectively — and how to do the exact opposite. You’ll leave with a list of common mistakes and anti-patterns to avoid — helping you and your teams create OKRs that don’t suck!


Further Reading

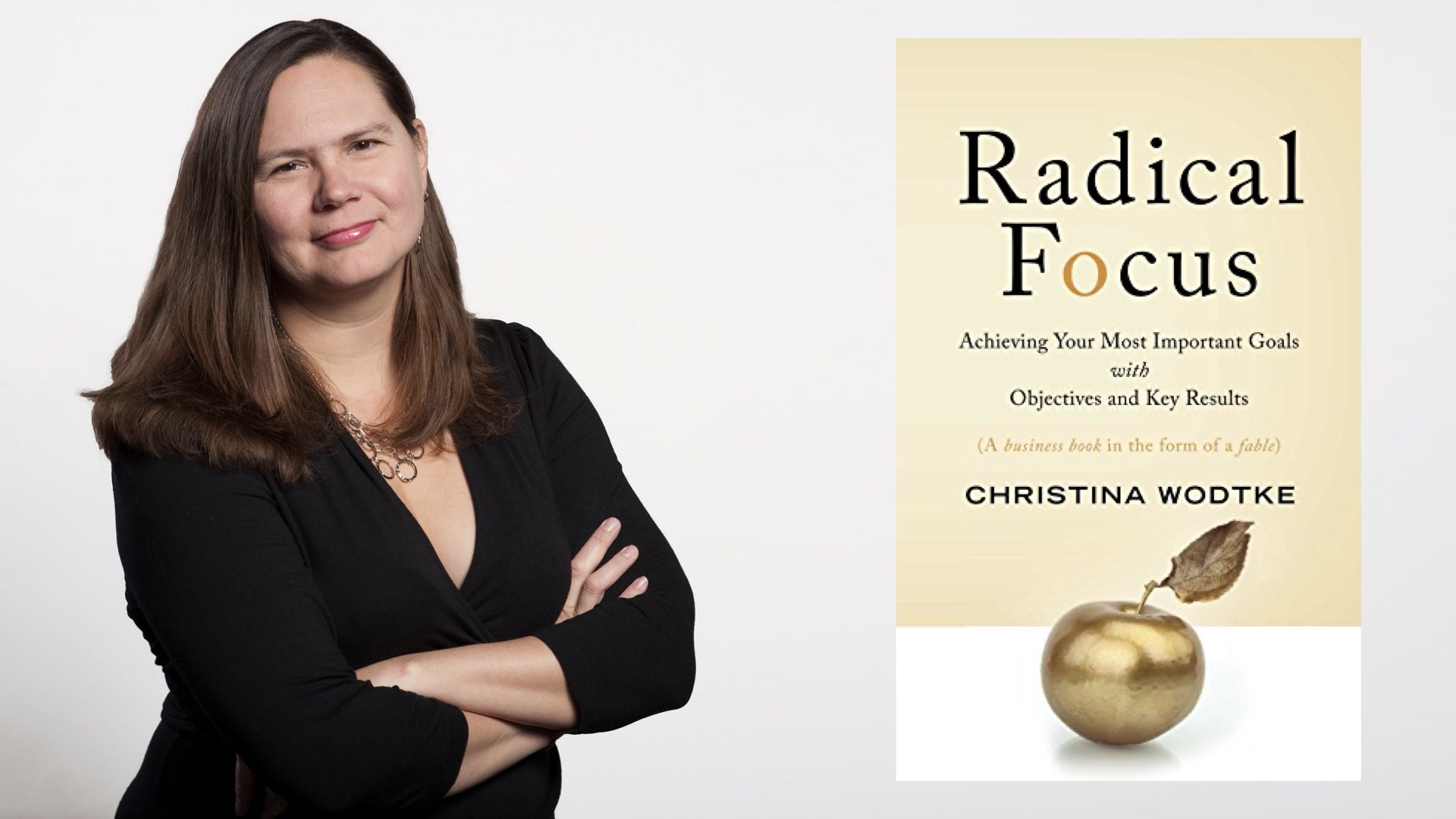

- Radical Focus by Christina Wodtke — my go-to book recommendation for people who are new to OKRs.

- Christina's The Art of the OKR, Redux article is well worth a read too.

- Read Measure What Matters by John Doerr after Christina's book. There's some solid information here — especially if you're in a larger organisation. However…

- … there are some aspects of Doerr's book I've found a bit problematic in practice. This great review of Doerr's book by Felipe Castro covers those issues nicely.

- Information on Goodhart's Law.

Keep in touch

If you liked this you'll probably like our newsletter (No fluff — just useful, applicable information delivered to your inbox every other week. Check out the archive if you don't believe us.)

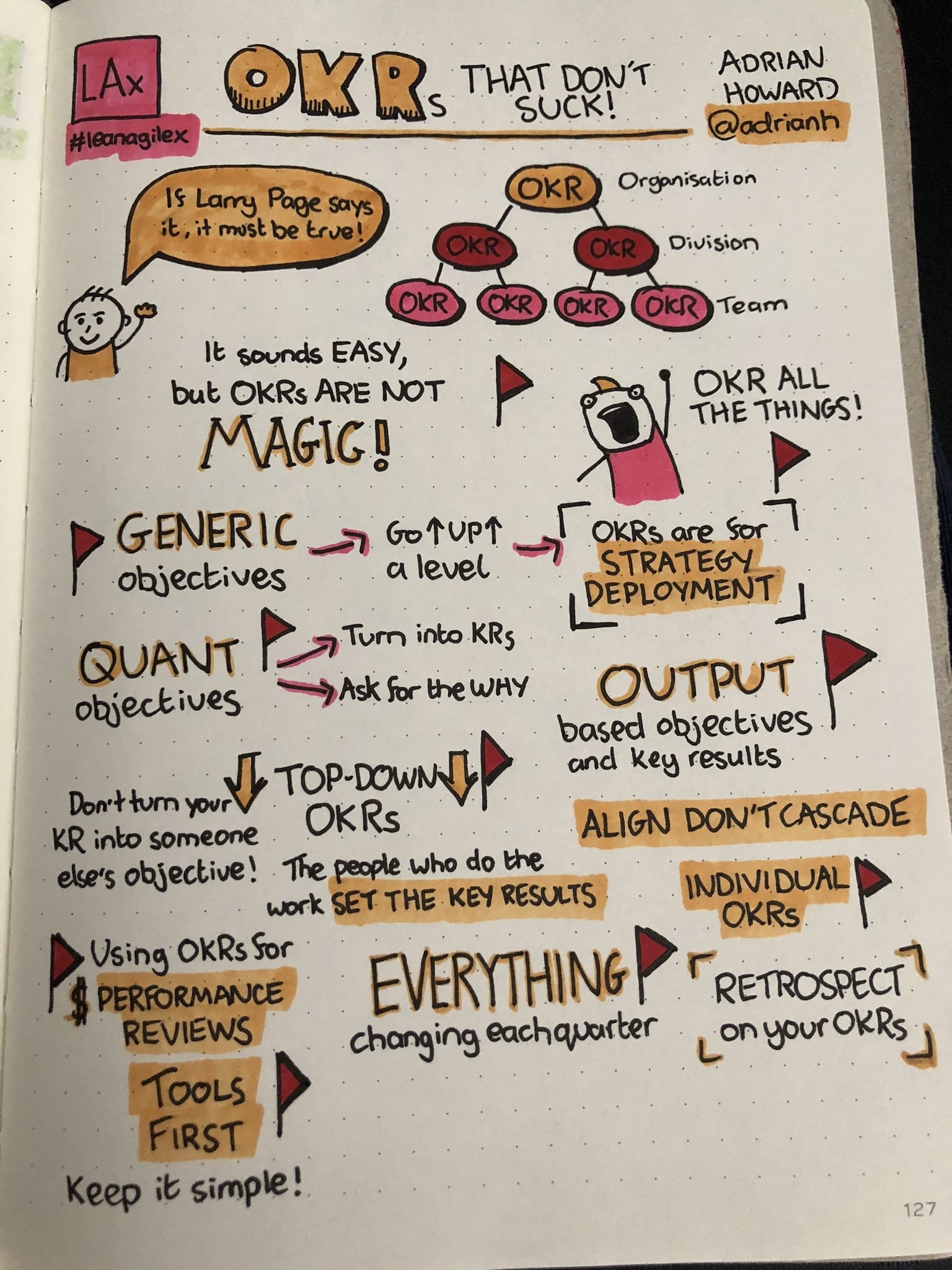

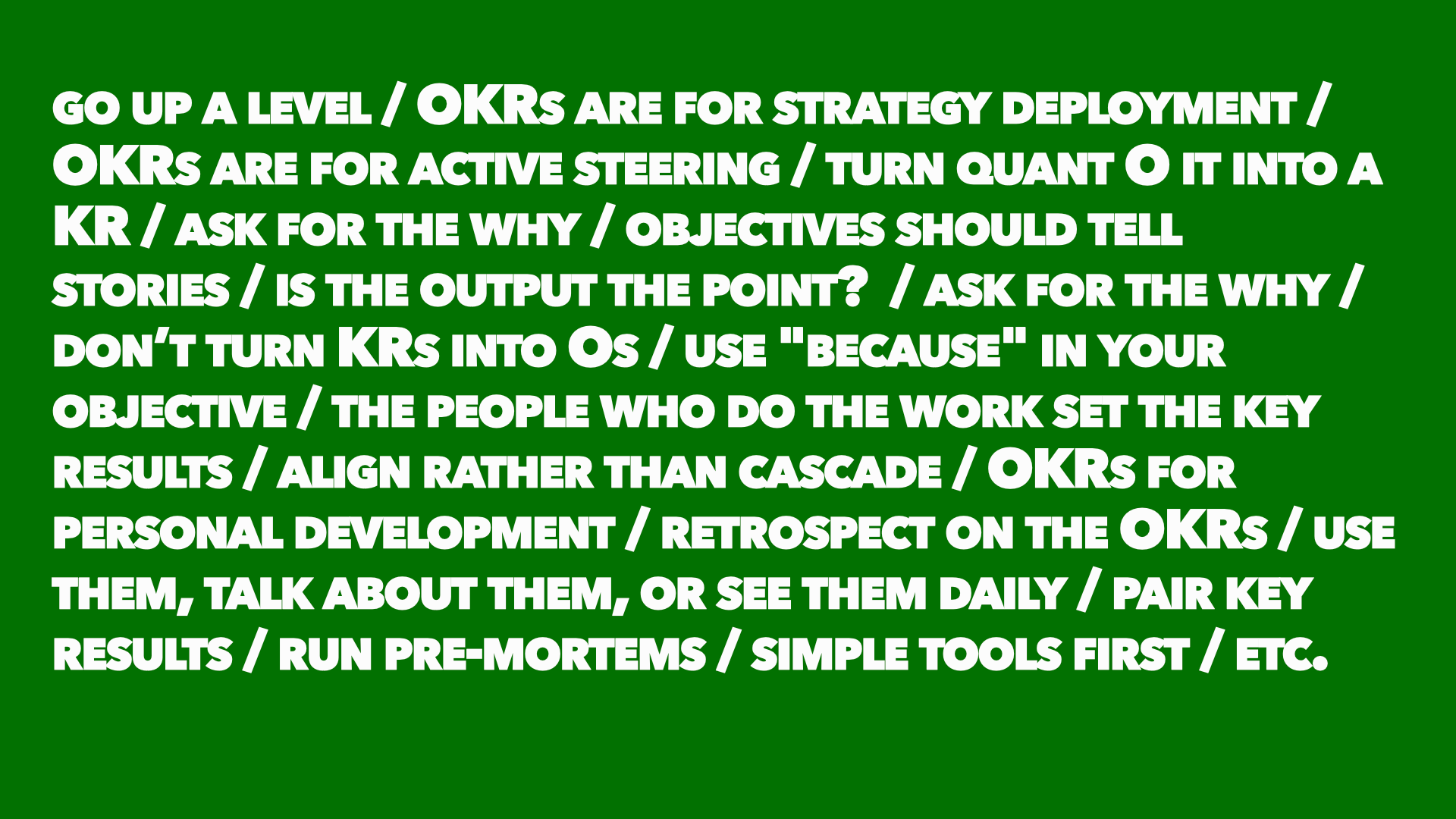

Sketchnotes

Tom Walsh drew some sketchnotes of the session.

Talk Transcript

Hi — I’m Adrian Howard - adrianh on the twitters. These days I spend my time helping organisations solve problems where agile delivery, user experience, and product management overlap.

It's been my enjoyable misfortune to work with several clients in recent years who've been going through the process of adopting OKRs with their agile teams. And it's normally gone something like this…

Everything is awful. Everything is awful. The teams are not aligned. People are building stuff and we don't quite understand why. We're shipping things… sort of… but we're not quite sure if we're shipping the things our customers will love — or need.

And then somebody — usually in upper management — reads an impressive quote somewhere. About how awesome OKRs are.

"OKRs have helped lead us to 10x growth, many times over. They’ve helped make our crazily bold mission of ‘organizing the world’s information’ perhaps even achievable. They’ve kept me and the rest of the company on time and on track when it mattered the most."

Well — that sounds awesome! And Larry Page said it so it must be true!? So OKRs are a good idea! It sounds fairly simple!

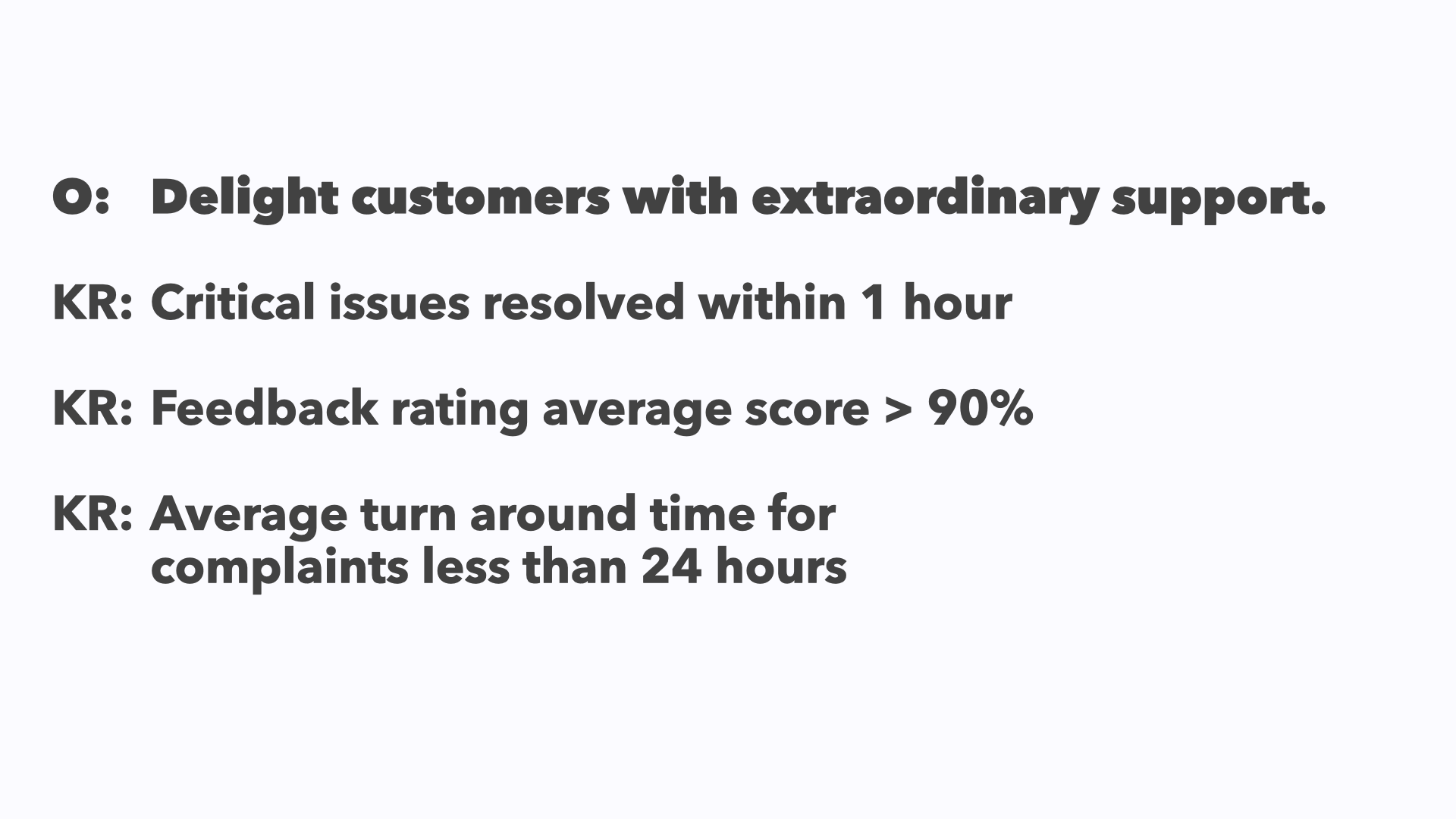

We have a qualitative objective that describes what we want to achieve, and we have some quantitative key results for how we achieve that objective. It makes sense having that out there and in public. That would help people understand and align on what they should be building…

And there's the cascade. With the big strategic OKRs at the top of the company.

Which then cascade down to the departments who deliver on smaller scale OKRs aimed at supporting the company OKRs.

And the departmental OKRs then cascade down to the team OKRs — and the teams do the stuff to meet their OKRs, which then meet the departmental OKRs, which then meet the organisational OKRs. And it's all awesome! This sounds good, this sounds simple, this sounds like something that we can adopt…

And then they Google "OKR tools", talk to a lot of salespeople and buy one…

And then there's a little bit of a panic, as everyone runs around trying to set their first set of OKRs, because no one's done it before. Maybe we didn't brief people and get them into the idea of what they wanted to do early enough. Maybe we forgot that they had to do their day-to-day work at the same time as they were doing this OKR setting exercise. There's a bit of flailing around, but we get it done in the end.

And then, because they're quarterly, nothing happens for a while. The product managers or the delivery managers draw pretty graphs from the KRs. These get fed up into a departmental report deck, which gets summarised into a slide on the monthly C-level status update deck. And at the end of the quarter, things are still…

Fine… Nothing has changed. This is a mild exaggeration, but not a great one. I've been through this process with a few different clients, and they've all had the same pattern of "This sounds really cool and easy. We'll do this thing and it'll be great." And then they fail miserably the first couple of times around. It sucks. So how do we get around this? This is the problem…

They're not magic. They're awesome. I love OKRs. They're great, and they're useful, but they don't magically make your organisation aligned. They don't magically give the organisation a vision if it doesn't have one. They don’t magically make teams autonomous. Just like writing "as a user" on all your user stories does not make you a user-centric company, writing OKRs does not magically give you a strategy and vision.

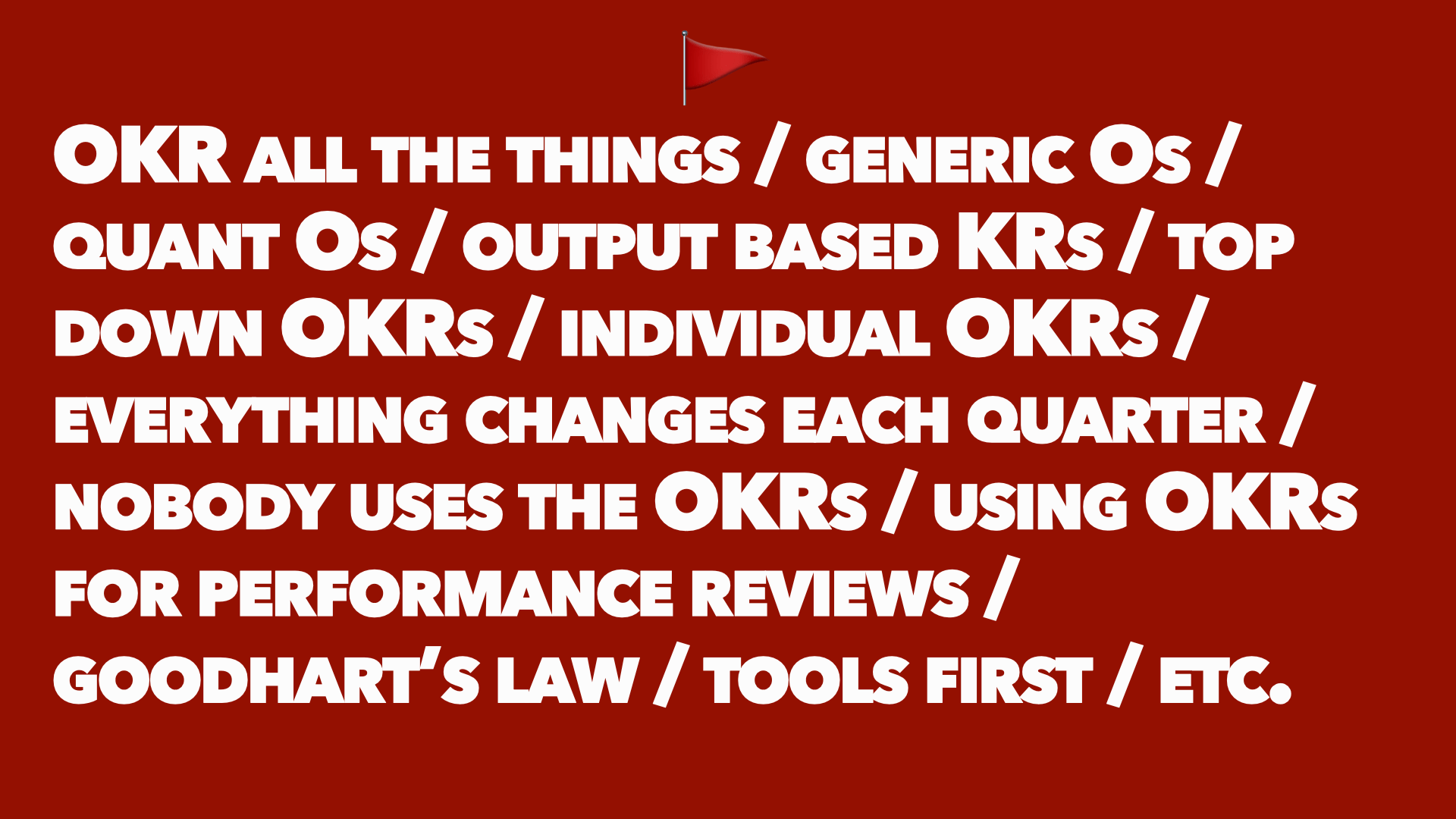

While working with clients I’ve come across some common red flags, which is what we’re going to talk about for the rest of the session. These are just red flags — warnings. I'm not saying these things are always wrong, or that if you see any of them your OKR is awful. I'm just saying if you see them all the time, across all your OKRs, then that's probably something you need to look at. They’re a signal that you should be paying attention.

The first, and the most common for organisations adopting OKRs, is OKRing all the things. Every single metric that the company already has now becomes an OKR. Your Net Promoter Score from marketing — now an OKR. The System Usability Scale score that's coming out of the usability testing — now an OKR. Our Monthly Recurring Revenue — that's an OKR. Every Key Performance Indicator — now an OKR. You get this huge swathe of them that completely fail to give any direction to the organisation. They’re also often quite generic…

For example, at several different companies I’ve seen an OKR that was basically “X times more money in Y months”. Which is like, “Oh, a company wants to make more money! Shocking! What a fascinating piece of strategic insight!” It doesn't give you direction. It doesn't tell you the why. The fact that I’ve seen OKRs like this at multiple companies tells you that they're not providing any kind of strategic vision. Unless you think they all had the same strategy. So if you see “OKR all the things” or “generic OKRs” as your OKR process starts rolling out, try to go up a level…

Why do you want to increase the Monthly Recurring Revenue? What will a better Net Promoter Score get you? What's your strategic reason for increasing your product’s usability? Are we creating a new market, or expanding into an existing market, or rolling out a new feature set, or expanding the platform so it supports a new bunch of customers? What's the thing that we're trying to do? …

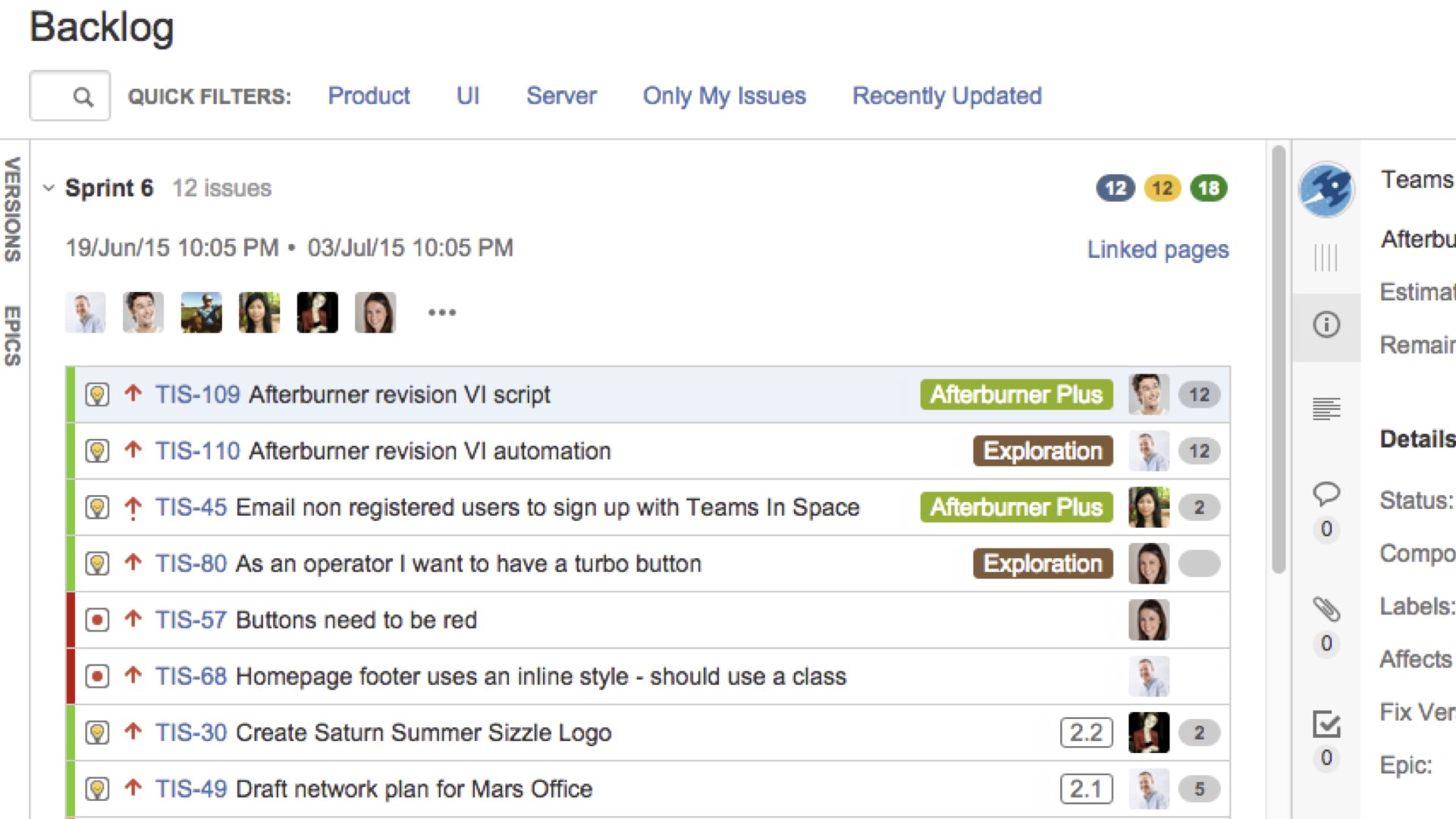

Because at their best OKRs are a form of strategy deployment. Most companies have some kind of vision statement. Something that lets the organisation know what it’s trying to achieve. But most of the time nobody looks at it. Most of the time people end up staring at things like this.

Because the vision isn’t actionable. It describes what folk want to do — but not how to do it. On the other hand many backlogs are just talking about what we’re doing — not the why.

And this is where strategy deployment comes in. I love this quote from Melissa Perri's book Escaping the Build Trap:

"Strategy deployment is about setting the right level of goals and objectives throughout the organization to narrow the playing field so that teams can act."

The "right level of goals and objectives". So you can “act”. So you can actually do something. An objective of “make more money” isn’t the right level. It doesn't give you a how or a why. It’s not going to change how you act. It doesn’t constrain you enough for there to be a direction in your approach. On the other hand, for a SaaS company that currently only supports American users, an objective like “Enter the Asia-Pacific region” just might. OKRs are a kind of strategy deployment…

Another way of thinking about it is this: unless you can actively steer what you're doing on a day-to-day and month-to-month basis with the OKRs, they’re not useful. If you give a dev team an objective of “Sign up customers in the Asia-Pacific region” — but don’t allow them to decide what features to ship, or do research on customers in that region, or hire somebody with internationalisation & localisation experience, then they have no reasonable way to achieve that objective. They don't have power, or influence, or control over that number. Good OKRs need to be specific enough to give direction, and actionable by the group tasked with achieving the objective.

Quantitative objectives are another common red flag. That's when you're just talking about a number. When the objective is "increase monthly recurring revenue by 20%". That's not a strategy. That's just something you'd like to happen. Your strategy is how you are going to get that thing that you would like to happen. There’s a reason that OKRs are not just called “KRs”! So when you start seeing quant objectives…

Turn them into key results. That's what key results are for! They're the measures and then…

Start asking the why. Why do we want to increase Monthly Recurring Revenue? How do we think we're going to do that? Are we going to be exploring a new market? Are we giving new features to existing customers? Are we charging more? What's the strategy? That's what the O of the OKRs should be summarising…

The Objective should tell a story. We want to engage in this new market. And we're going to measure that we engage in this new market by getting these five key customers signed up, by getting a certain percentage of our profits from it, and by rolling out this new feature set that this market needs. Those are the things that tell the story behind “increase monthly recurring revenue by 20%”.

Output-based OKRs are a similar problem. Folk have a nice objective of expanding into a new market. And then the key results are just a checklist of shipping milestone 1, milestone 2, milestone 3, and so on. That’s just a list of things! It doesn’t tell you a story. It doesn't let you steer. If you don't deliver the first milestone what do you do instead? It doesn't help you make strategic decisions if milestone 2 turns out to be impossible. The only kind of exception to this is when the output is the point…

For example, I worked with a team where one of their key results was "we will ship working software every sprint", which kind of sucks as a general key result. But for this team, who hadn't been shipping software for a long, long time, it didn't. That wasn't entirely their fault. There were infrastructure and operational issues involved as well. But it was a major stretch goal for them, and it was a blocker for a bunch of other strategic work. So in that particular instance, the output was the point and that was great. But in general, when I start seeing lots of output-based KRs…

I start asking for the why again. Why do we want to ship feature one, feature two and feature three? How do we measure whether feature one, feature two and feature three, are achieving their strategic goals? Let's start looking at those things instead. Rather than shipping the new signup feature, can we look at how many people signed up? Because that's the thing we're trying to make happen. Rather than ticking off “shipped the new data pipeline”, can we look at how many of our customers are using the new data pipeline rather than the old data pipeline? Because that's the thing we're trying to actually achieve. Which leads me to…

Top down OKRs. When your boss tells you what the department OKRs are going to be, and what the team OKRs are going to be, and what the individual OKRs are going to be. This, not to put too fine a point on it, sucks. Copying and pasting a top level key result and making it somebody else’s objective is mostly useless because it becomes divorced from the story. It takes away the strategy, and just leaves the metric.

Don't turn a Key Result into somebody else’s Objective.

If somebody does, add “because” to the end and add in the objective it came from. If you get handed your boss's key result of “Critical issues resolved within 1 hour”, turn it into “Critical issues resolved within 1 hour because we want to delight customers with extraordinary support”. Then it becomes obvious that doing something like changing what “resolved” means isn’t going to help you! What's the “because”? What’s the “why”? Then you can start building KRs around that objective…

It's a team job, not an individual job. You need the insights from everybody involved in achieving the OKR to know whether it makes sense.

A rule I’ve found really useful is “the people who are doing the work to meet an objective are the ones who get to set the key results.” So if my boss says this is the objective for our team, he doesn’t get to set the KRs. The team does. We’re the ones who get to say that we can measure progress on that objective by looking at these things. Then you can have a useful conversation and discussion around whether those Key Results work, and whether that Objective is feasible, rather than it being dictated from above. You end up with a more realistic chance of having a set of objectives and key results that are achievable, approachable, and that the team and organisation have bought into…

You're looking for alignment rather than a cascade…

Another symptom of that cascade going wrong is when the cascade carries on past the team level down to individuals. For me, that's an anti-pattern. It almost always works badly…

Because for most work in the product space, individual goals, outcomes & objectives have a different cadence from the longer strategic work. Individual goals & outcomes change all the time as the team as a whole discovers what works best to achieve their outcomes. As GDS folk are fond of saying, “The unit of delivery is the team”. As soon as you start cascading team OKRs to individuals you either end up continually changing them as work at the team level causes people to change their focus, or, even worse, you end up with individuals sticking to their no-longer-relevant individual OKRs — because somebody in the org is judging them on those results — which then get in the way of achieving the organisational objectives. So just avoid them if you can…

That’s not to say OKRs can’t be really useful for personal development — as long as they're not cascading down from higher level team and organisational objectives. Having a personal OKR to “get better at automated testing” or “learn React” is fine. Having a way to talk about personal objectives and accountability for those objectives is super useful. It’s trying to push team and organisational objectives down to the individual contributor level that tends to fall over.

The problems with individual OKRs — or indeed with group OKRs — are especially pernicious if you tie them tightly to performance reviews and incentives. Because it makes it more likely that people will game the KRs — either consciously or unconsciously. It also frames not achieving an OKR as a lack of effort. Which tends to stop people asking really important questions. For example:

Did we have a “Black Swan” event, something that we couldn’t predict? I’m sure Zoom’s targets have been massively exceeded during 2020. I’m equally sure that restaurants and theatres are having a terrible time this year. Is that because they delivered really well on their strategy, or really badly? Or was it because we’re having a pandemic?

Was the strategy wrong? Was our Objective tied to a high-level strategy that just turned out to be incorrect? Invalidating a bad strategy early is a good thing. Maybe nobody wants our product in the Asia-Pacific region? If we only tie rewards to OKRs succeeding, it makes discovering bad strategies early harder.

“Did we set the right objective?” Sometimes the thing we discover from an OKR going really well or really badly is that we were trying to achieve the wrong objective. Maybe a black swan event means we need to switch our Objective ASAP! Having incentives tied to OKR success makes it much harder to have these conversations.

Did we set the right Key Results? Sometimes the thing we discover from an OKR going really well or really badly is that we were measuring progress towards our objective with the wrong key results. Again — having incentives tied to OKR success makes it much harder to have these conversations.

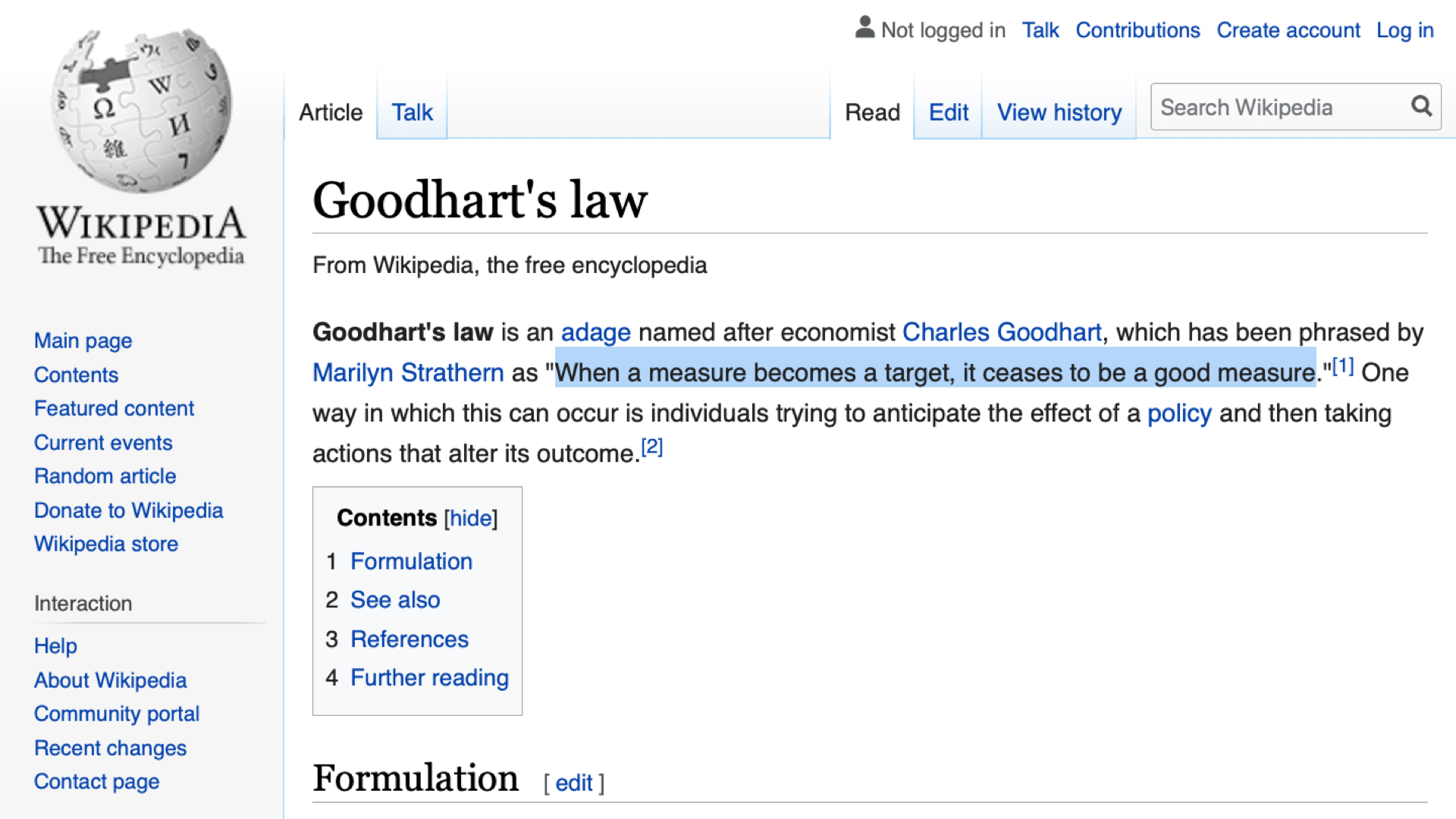

Goodhart's Law is one way to think about this.

If you've not come across it already, it states: "When a measure becomes a target, it ceases to be a good measure." Basically, people game measures, either consciously or unconsciously. Especially when rewards and punishments are attached to achieving those measures. And OKRs are just as susceptible to this as anything else. If an objective about expanding into a new market has a key result on subscriber numbers, we probably don’t want those new subscriptions driven by “get a free puppy with every subscription” — because puppies are expensive. And it’s inhumane. So what can we do to avoid this…

One easy method is to pair key results. When you have one key result that is a proxy for something you want (“more subscribers”, for example), pair it with something else that reflects the possible bad consequences of that metric going up (“cost per acquisition”, for example). That way we can quickly see that “a free puppy for every new subscriber” results in soaring acquisition costs, and everybody can see that something… interesting is happening…

Pre-mortems are another useful method to help you come up with different options and ideas for what your key results could be. A pre-mortem is an activity to surface the ways a project might fail before it starts. You ask the team: “It’s six months in the future and everything has gone horribly wrong. What do we see in the world? What effects do we see? How do we know it’s gone wrong? Why?” (I’m fond of having a second round where you ask the opposite question: “It’s six months’ time, and everything has gone awesome. What do you see in the world? What effects do you see?”)

The answers to those questions will give you lots of information about the things that are interesting to measure and look for in your KRs. For example, a pre-mortem scenario of “We spent a lot of money to acquire customers, but they didn’t stay on our platform” can turn into KRs about customer churn & cost of acquisition.

Another symptom of incentives hurting people is Everything Changing Each Quarter. Every quarter there is a completely new set of OKRs pushing people to do completely different things. As I keep repeating, OKRs are about strategy. Unless you’re a startup, your strategy probably shouldn’t be changing that fast. If everything is changing all the time, you aren’t talking about your objectives at the right level of granularity. When folk are rewarded or punished based on whether they achieve their OKRs, people look for something to measure that will look good, rather than something that will help the company. So if you see everything change, think hard about whether that’s actually a reflection of your strategy changing or something more problematic…

You need to retrospect on those OKRs. Why did we choose this? What went wrong? What went well? Look at all the different reasons for achieving, or not achieving, those objectives and those key results. Did we measure the right thing? Did we look at the right objective? Has the objective changed? Why has the objective changed? How did we use the OKRs? What decisions did we make using the OKRs? All of those questions are really useful. For example, one problem that often pops up is…

Nobody actually using the OKRs. That’s a sign there's something wrong either with the OKRs, or how they're being worked with among the team…

Ideally, you should be using them, talking about them, or seeing them on a daily basis. And this feels odd to some teams I've worked with…

But if you've got them on the wall, then you see them every day as you walk by…

OKRs should be useful when you’re planning and prioritising your work. They are, after all, a direct expression of what you’re trying to achieve. So they should be referenced where you’re trying to understand what thing to do first and why…

OKRs should be useful in retrospectives & reviews. Did we achieve the thing that we wanted to do over the last few weeks? Did we move the KRs in the way that we expected to? Why? Why not? If you're using OKRs for planning & prioritisation & retrospectives then you should be using them almost on a daily basis. When I see teams only looking at them once a month, or just before the quarterly exec update rolls around, that means the OKRs themselves aren't useful to them. Either the level of abstraction is wrong or they don't have control over them. Which is why you need to run retrospectives on the OKR process too.

Tools were another barrier to effective OKRs that popped up in retrospectives, especially when people took a tools-first approach. The folk who googled "OKR tool" to find the OKR plugin for their existing project management software, or the tool that Popular Company is using. The problem is that everybody I know who adopts OKRs changes and adapts their ways of working as they go.

How they work with OKRs in that first quarter is very different from how they work with them in their fourth quarter, because they've learned stuff: they're understanding constraints; they're figuring out how to make their process work better. If you buy a tool in week one, you've bought the wrong tool for month eight. In fact, you've often constrained yourself to work in a certain way because that's the way the tool works — and that doesn't help you discover what is or isn't working about your OKRs…

So I'm a very strong proponent of simple tools first. Whatever is cheap and simple, that everyone knows how to use and everyone has access to. Use a spreadsheet. Use a whiteboard. Use something that you can tweak and change and evolve. And yes, maybe in three or six months' time you'll be buying a tool. But you'll be buying the right tool, rather than the tool that was sold to you best when you didn't know what you wanted…

We’ve talked about a lot…

First: remember what OKRs are for. OKRs are about deploying a strategy to the rest of your organisation. So they need to be something that is relatable, understandable and leads the organisation to do the things that the organisation wants to be doing.

Melissa Perri's book Escaping the Build Trap is not about OKRs, but it has some really useful thinking about vision and strategy and the different levels in an organisation where you think about those things. The language of an OKR at the C-suite level is going to be different from the language of an OKR at the product team level.

Second: retrospect on your OKR process all the time. Not just once a quarter. Did we set the right objective? Did we measure the right things with our key results? Did we meet our objective or not? Sometimes people find they’ve met the objective even though they didn't meet their key results. Or they've not really achieved their objective, despite acing all of their key results.

Those are really interesting bits of learning — which is why it's a terrible idea to use OKRs as a way to do performance reviews or tell individuals or teams whether they've been successful or not. Because, a lot of the time, you'll see really interesting useful-to-the-organisation learning coming out of OKRs that have — in scare quotes — "failed". Because you've discovered “the market doesn't actually want this product” or “people's price point is different from what we expected it to be” or “that customer pain point is not the blocker for adoption we thought it was”. What OKRs can help us discover is that our strategy is wrong, not that the people doing the work to achieve that strategy weren’t trying hard enough…

This is a small list of anti-patterns. I've seen more, but we only have 30 minutes! All of these have come out of retrospectives on an OKR process with clients. Where our OKR process fell down, we looked for fixes. And where it worked well, we looked for ways to turn up that success.

And that's the thing that makes it work. It’s not magic. Just filling in an OKR template is not going to get you a successful OKR practice. Using an OKR tool is not going to get you a successful OKR practice. Using OKRs is not going to magically supply your organisation with a good vision and strategy if you don’t already have one.

This is one of the reasons I thoroughly recommend Christina Wodtke's book “Radical Focus”. It’s very much focused on the team level adoption of OKRs. It talks about a team that's adopting OKRs, how it messes up, and how it learns from messing up to get to a better place. Buy it & read it.

You can find this video, and a transcript, and some links to the books I’ve mentioned and some further resources at quietstars.com/lax2020

And if you're only going to take one thing away from today — remember that OKRs are not magic. They’re a really useful way to help your organisation align around strategy — but you need to do the work to make them effective. What is going to get you a good OKR practice is repetition, learning, and practice. Not filling in an OKR template or buying a tool.

Thank you.

Well… that’s the end of the recorded bit… shoo! I’m in the Slack now if you have any questions — and I’m around for the rest of the conference. Do remember to fill out your feedback forms! Thanks for listening!